
7 Tools That Will Help You Find Duplicate Content on Your Website

Duplicate content on your website can hurt your search engine ranking. Major search engines such as Google and Bing use sophisticated strategies to reward the web pages with the highest-quality unique content by adding them to their indices, while filtering pages with exact or “appreciably similar” content out of the SERPs.

This article provides an overview of duplicate content and how it can affect your position in search engine listings.

We have also compiled a comprehensive list of tools you can use to ensure that your website adheres to search engine guidelines and provides authentic content to users.

What is duplicate content, and why does it matter?

The “Duplicate Content” article in the Google Search Console Help Center states:

“Duplicate content refers to substantive blocks of content within or across domains that either completely match other content or are appreciably similar.”

Some duplication is inevitable, such as block-quoting text from another source (as done above) or e-commerce websites using the generic product descriptions provided by their suppliers.

However, significant concerns arise when a substantial number of pages on your site host content similar to other pages on the Internet.

Although Google does not impose a penalty for duplicate content as such, duplication can still affect your website’s search engine standing for several reasons.

How do duplication issues arise?

In most cases, website owners do not create duplicate content deliberately. Even so, a study conducted by Raven Tools estimated that 29% of sites face duplicate content issues.

The following are a few ways duplicate content is generated unintentionally:

URL variations

At times, the same page of your website is reachable at multiple URLs. For example, on an e-commerce website, a page featuring a women’s clothing item on sale may be found both in the “Women’s Clothing” section and in the “Sale” section.

Session IDs are another cause of duplicate content. Many e-commerce websites use session IDs to track user behavior. However, when each user is assigned a different session ID that is added to the URL, every session produces a new duplicate of the page’s core URL.
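One way to see how session IDs multiply URLs during an audit is to strip them and group the addresses that collapse to the same core URL. The sketch below is a minimal illustration, assuming session IDs appear as query parameters; the parameter names in `SESSION_PARAMS` and the helper names are hypothetical, so adjust them to what your platform actually appends.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical session-ID parameter names; check which names your own
# platform appends to URLs and adjust this set accordingly.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}


def strip_session_ids(url: str) -> str:
    """Return the URL with any session-ID query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


def group_by_core_url(urls):
    """Group URLs that collapse to the same core URL once session IDs are removed."""
    groups = {}
    for url in urls:
        groups.setdefault(strip_session_ids(url), []).append(url)
    # Only core URLs reachable through more than one address are duplicates.
    return {core: variants for core, variants in groups.items() if len(variants) > 1}
```

Feeding it a list of crawled URLs returns only the core URLs that appear under more than one address, which are the candidates for a canonical tag or redirect.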

WWW vs. non-WWW pages

When your site has two versions, one with and one without the www prefix, and both serve the same content, the two versions compete with each other for search engine rankings.
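To spot www and non-www pairs in a list of crawled URLs, you can map every URL onto a single preferred host and look for collisions. This is a minimal sketch under the assumption of plain host names (no user info in the URL); `to_preferred_host`, `find_www_duplicates`, and the `prefer_www` flag are illustrative names, not part of any tool mentioned here.

```python
from urllib.parse import urlsplit, urlunsplit


def to_preferred_host(url: str, prefer_www: bool = False) -> str:
    """Rewrite the URL's host to the preferred www or non-www form."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    new_host = "www." + bare if prefer_www else bare
    return urlunsplit((parts.scheme, new_host, parts.path, parts.query, parts.fragment))


def find_www_duplicates(urls):
    """Return preferred URLs that were seen under both host variants."""
    seen = {}
    for url in urls:
        seen.setdefault(to_preferred_host(url), set()).add(url)
    return {key: sorted(variants) for key, variants in seen.items() if len(variants) > 1}
```

Whichever host you pick as preferred is the one the other version should redirect to.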

Copied content

Some websites plagiarize by copying blog posts or republishing editorial content, a practice that results in duplicate content and is frowned upon.

Many e-commerce websites also create duplicate content when they sell the same products as other sites and use the standard product description provided by the manufacturer.
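To check how close your product copy is to a manufacturer’s description, a common technique is to compare overlapping word sequences (shingles) with Jaccard similarity. The sketch below is an illustrative, assumed approach, not how any particular tool or search engine measures similarity; the shingle length `k=3` is an arbitrary default.

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word sequences in the lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short texts become a single (possibly shorter) shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)
```

A score near 1.0 means the description is essentially the manufacturer’s text; where to draw the line for rewriting is a judgment call.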

How to fix duplication issues?

The following are a few technical solutions for tackling duplication issues:

Canonical URL

Adding a canonical URL to each duplicated page helps search engines identify the original page that should be indexed, so the duplicate URLs are generally not indexed separately. As a result, links, content metrics, and other ranking factors are attributed to the original page.
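A canonical URL is declared with a tag such as `<link rel="canonical" href="https://example.com/original-page" />` in the page head (the URL here is a placeholder). When auditing, it helps to confirm which canonical each page actually declares. The sketch below uses Python’s standard `html.parser`; `CanonicalFinder` and `find_canonical` are hypothetical helper names, and it assumes you already have the page HTML as a string.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag encountered."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag == "link" and self.canonical is None:
            attributes = dict(attrs)
            # rel can hold several space-separated values.
            rel = (attributes.get("rel") or "").lower().split()
            if "canonical" in rel:
                self.canonical = attributes.get("href")


def find_canonical(html: str):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical
```

Self-closing `<link ... />` tags are handled too, because `HTMLParser` routes them through `handle_starttag` by default.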


301 redirect

In most cases, implementing a 301 redirect is the most suitable way to give precedence to the original page. A permanent 301 redirect sends users and search engines to the original page regardless of which URL they type into the browser.

Redirecting the duplicated pages with a 301 to the most valuable page among them stops the different pages from competing with each other for rankings and strengthens the relevance of the original page.
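How a 301 is configured depends on your server or CMS. As a server-agnostic illustration, here is a minimal WSGI application sketch that answers requests for duplicate paths with `301 Moved Permanently` and a `Location` header pointing at the original page. The `REDIRECTS` mapping and its paths are hypothetical examples.

```python
# Hypothetical mapping from duplicate paths to the original page's path.
REDIRECTS = {
    "/sale/womens-dress": "/women-clothing/womens-dress",
}


def app(environ, start_response):
    """WSGI app: permanently redirect known duplicates, serve everything else."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # A 301 tells browsers and crawlers the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [f"Page: {path}".encode("utf-8")]
```

To try it locally, you could serve `app` with `wsgiref.simple_server.make_server` from the standard library; in production the same rule usually lives in the web server or CMS configuration.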

Meta Noindex, Follow

By adding a robots meta tag with the value “noindex, follow” to the HTML head of a page, you can prevent the page from being indexed by search engines. Search engines can still crawl the page and follow its links, but when they encounter the tag (or the equivalent X-Robots-Tag HTTP header), they drop the page so that it is not included in the SERPs.
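The tag itself looks like `<meta name="robots" content="noindex, follow">`. To verify during an audit that a duplicate page really carries it, you can collect the robots directives from both the meta tag and the `X-Robots-Tag` header value. This is a simplified sketch: the helper names are hypothetical, and it ignores user-agent-specific forms such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if (attributes.get("name") or "").lower() == "robots":
                content = attributes.get("content") or ""
                self.directives.update(
                    d.strip().lower() for d in content.split(",") if d.strip()
                )


def robots_directives(html: str, x_robots_tag: str = "") -> set:
    """Merge meta-tag directives with those from an X-Robots-Tag header value."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    directives.update(d.strip().lower() for d in x_robots_tag.split(",") if d.strip())
    return directives


def is_noindex(html: str, x_robots_tag: str = "") -> bool:
    return "noindex" in robots_directives(html, x_robots_tag)
```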

Rewriting content

A simple way to distinguish your content, especially product descriptions, from that of other e-commerce websites is to supplement the generic description with your own wording that targets your specific audience and their problems. Include your unique selling proposition in the content describing the product to compel users to buy from you.

For a more comprehensive understanding of how duplicate content is created, how to prevent it, and how to fix the issues, read this blog post by Neil Patel, marketing expert, consultant, and speaker.

Tools to detect duplicate content

Before you can implement solutions to counter the effects of duplicate content, you need to audit the content of your website to determine what needs to be fixed.

Discovering duplicate material can be an intensive and time-consuming process; the following tools can ease it and help you find duplicate content on your website:
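Before turning to dedicated tools, the simplest audit check is to fingerprint each page’s text and group identical fingerprints. This sketch assumes you have already extracted each page’s main text into a dictionary keyed by URL; it only catches exact duplicates after whitespace and case normalization, not near-duplicates.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict) -> list:
    """Given {url: page_text}, return groups of URLs whose text is identical."""
    groups = {}
    for url, text in pages.items():
        groups.setdefault(fingerprint(text), []).append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```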

Moz Site Crawl Tool

Moz is a well-recognized name in the field of SEO, inbound marketing, content marketing, and link building. In addition to these services, Moz also offers a Site Crawl tool, which is very useful in identifying duplicate page content within the website.

The tool flags duplicate content as a high-priority issue, since a high ratio of duplicate to unique content can significantly diminish a website’s credibility with search engines.

The tool also allows you to export the pages with duplicate material, which makes it easier to determine the changes that need to be implemented.

The Moz tool is a paid tool with a 30-day free trial period.

Siteliner

Siteliner is a fantastic tool for in-depth analysis of which pages are duplicated and how closely they match. It identifies not only the copied pages but also the specific passages of text that are replicated.

This feature is helpful when large bodies of text are duplicated even though the entire page is not. It also offers a quick way to see which pages contain the most internal duplicate content.
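Passage-level checks of this kind can be approximated by splitting each page into sentences and recording which pages share them. The sketch below is an assumed, simplified approach, not Siteliner’s actual method: it uses a naive sentence splitter, ignores sentences shorter than a hypothetical `min_words` threshold, and ranks pages by how many shared sentences they contain.

```python
import re
from collections import defaultdict


def split_sentences(text: str) -> list:
    """Naively split text after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def shared_passages(pages: dict, min_words: int = 5) -> dict:
    """Map each sentence found on 2+ pages (lowercased) to the sorted URLs."""
    where = defaultdict(set)
    for url, text in pages.items():
        for sentence in split_sentences(text):
            if len(sentence.split()) >= min_words:
                where[sentence.lower()].add(url)
    return {s: sorted(urls) for s, urls in where.items() if len(urls) > 1}


def pages_by_internal_duplication(pages: dict, min_words: int = 5) -> list:
    """Rank pages by the number of shared sentences they contain."""
    counts = defaultdict(int)
    for urls in shared_passages(pages, min_words).values():
        for url in urls:
            counts[url] += 1
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))
```

Boilerplate such as shipping notices tends to surface first, which is often exactly the internal duplication worth reviewing.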

Copyscape

Copyscape is one of the oldest plagiarism tools and is mainly useful for auditing website content to identify duplicates of your editorial content elsewhere on the web.

It crawls the website’s sitemap and compares each URL individually against Google’s index to check for duplicates of your pages.

It exports the data as a CSV file and ranks the pages by the amount of duplicated content, giving the most replicated page the highest priority.
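The workflow described above, reading URLs from a sitemap and exporting a ranked CSV, can be sketched with the standard library. This is an illustration, not Copyscape’s implementation: it assumes a standard `urlset` sitemap in the sitemaps.org namespace, and the per-URL match counts passed to `ranked_csv` are hypothetical input you would obtain from whatever checker you use.

```python
import csv
import io
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text: str) -> list:
    """Return the <loc> values of every <url> entry in a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def ranked_csv(scores: dict) -> str:
    """Given {url: match_count}, return CSV text ranked by most matches first."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["rank", "url", "matches"])
    ordered = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    for rank, (url, matches) in enumerate(ordered, start=1):
        writer.writerow([rank, url, matches])
    return buffer.getvalue()
```

Sitemap index files, which point to other sitemaps instead of pages, would need an extra step that this sketch does not cover.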

You can also purchase a subscription to the tool for a nominal fee to check the content you create in Word documents for plagiarism.

Screaming Frog

Screaming Frog is a website crawler that is highly popular among advanced SEO professionals because, in addition to duplicate content, it identifies potential technical issues, improper redirects, and error messages, among other things.

It creates a comprehensive crawl report of your site that displays all the titles, URLs, status codes, and word counts, which makes it simpler to review and compare titles and URLs to identify duplicates.

Low-word-count pages can be reviewed for quality and rewritten if they fall short, while the status code column helps identify pages returning 404 errors that need to be removed.
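Once you have a crawl report, the review steps above (duplicate titles, thin pages, 404s) are easy to script. This sketch assumes the export has been loaded into dictionaries with the illustrative keys `url`, `title`, `status`, and `word_count`; map them from your export’s actual column names. The 300-word threshold is an arbitrary assumption, not a search engine rule.

```python
from collections import defaultdict


def review_crawl(rows, min_words=300):
    """Flag duplicate titles, thin 200-status pages, and 404 pages in crawl rows."""
    by_title = defaultdict(list)
    thin, not_found = [], []
    for row in rows:
        title = row["title"].strip().lower()
        if title:
            by_title[title].append(row["url"])
        if row["status"] == 200 and row["word_count"] < min_words:
            thin.append(row["url"])
        if row["status"] == 404:
            not_found.append(row["url"])
    duplicate_titles = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return {"duplicate_titles": duplicate_titles, "thin": thin, "not_found": not_found}
```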

Google Search Console

Google Search Console can be used to discover duplicate content issues in several ways:

3. The URL Parameters section can help you determine whether Google is having difficulty crawling and indexing your site, which points to technically created duplicate URLs.

Google Search Console offers investigative tools that surpass in quality those provided by other search engines such as Bing. However, compared to Screaming Frog, it offers limited features and less additional insight. The service is free to set up for your website.

Duplichecker

Duplichecker is one of the best free plagiarism checkers; it lets you run text and URL searches to rule out plagiarism in your content.

The tool permits unlimited searches once you have registered and allows one free trial search. Scanning is completed in a short amount of time, although the duration varies with the length of the text and the size of the file.

The quality of this tool is inferior to that of advanced paid tools such as the one provided by Moz.

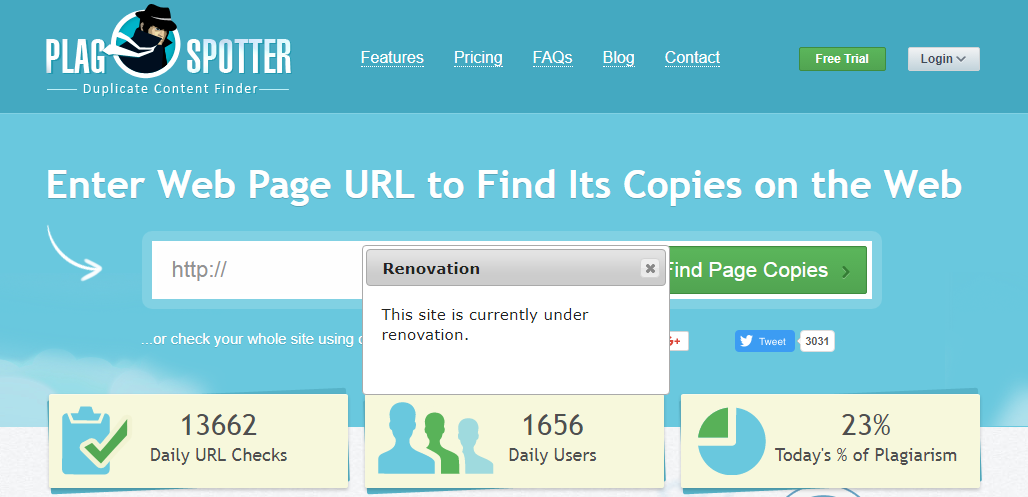

Plagspotter

Plagspotter is another plagiarism tool that offers free services. Its URL search is thorough and quick, and it yields sources of duplicated content for further review.

In addition to the free URL search, it also offers a plethora of valuable features in the affordable paid version, including full site scan, plagiarism monitoring, and batch searches. You can also sign up for their free 7-day trial.

Conclusion

Duplicate content, whether internal or external, can have a significant influence on your search engine rankings and the overall visibility of your website. Therefore, it is imperative to produce authentic, robust, engaging, and unique content to the best of your abilities.

Despite Google’s expertise in spotting unintentional duplication, the best practice is to keep your content free of plagiarism through periodic website content audits with the tools above, followed by fixing the detected issues.

